Building Anrok’s first LLM-powered feature

Using AI to save finance teams time without sacrificing accuracy

Everyone is talking about breakthroughs in AI and the disruptive new possibilities they present for startups and product teams. We at Anrok are big believers in that future. In addition to dreaming up the big new features that Large Language Models (LLMs) might unlock for finance users, we’re also exploring the more subtle improvements and new opportunities they present for day-to-day applications.

When it comes to making mundane workflows and complex objectives like tax compliance feel seamless, LLMs allow us to take on problems that would otherwise be cumbersome and time-intensive. Multimodal models, in particular those that take an image as input and produce text as output (we’ll call these Vision models), present a compelling opportunity for automation.

Today at Anrok, we’re launching our first LLM-powered feature to do a small thing with big impact for our users: using Vision models to extract critical data from lengthy tax compliance documents.

What we’re solving for

Anrok provides an end-to-end solution for sales tax and global VAT compliance. Part of the challenge companies face with compliance is collecting and storing exemption certificates from customers who are legally exempt from sales tax (typically non-profits, religious organizations, and government entities).

Hundreds of software companies rely on Anrok’s suite of features for exemption certificate management. However, there hasn’t previously been an easy way to extract relevant details from a given certificate—issue date, expiration, applicable tax jurisdiction, and so on—to store and record as discrete data.
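To make "discrete data" concrete, here is a minimal sketch of what a structured certificate record could look like once the fields are extracted. The `ExemptionCertificate` class, its field names, and the `parse_certificate` helper are hypothetical illustrations, not Anrok’s actual schema, and the sketch assumes the extractor returns dates as ISO 8601 strings.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ExemptionCertificate:
    """Hypothetical record holding the fields named in the post."""
    issue_date: date
    expiration_date: Optional[date]  # None when no expiration is recorded
    jurisdiction: str                # e.g. a US state code such as "TX"


def parse_certificate(raw: dict) -> ExemptionCertificate:
    """Convert extracted string values into a typed, validated record.

    Assumes dates arrive as ISO 8601 strings ("YYYY-MM-DD") and treats a
    missing or empty expiration as "no expiration recorded".
    """
    issue = date.fromisoformat(raw["issue_date"])
    expiration_raw = raw.get("expiration_date")
    expiration = date.fromisoformat(expiration_raw) if expiration_raw else None
    # Basic sanity check: a certificate cannot expire before it was issued.
    if expiration is not None and expiration < issue:
        raise ValueError("expiration_date precedes issue_date")
    # Normalize whitespace and case so " tx " and "TX" are stored identically.
    jurisdiction = raw["jurisdiction"].strip().upper()
    if not jurisdiction:
        raise ValueError("jurisdiction is required")
    return ExemptionCertificate(issue, expiration, jurisdiction)
```

Typed fields like these are what make it possible to filter, sort, or flag certificates (for example, ones nearing expiration) instead of re-reading the uploaded image each time.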

Relying on manual input or even optical character recognition (OCR) technology left much room for error. This led us to explore how multimodal LLMs could improve the process of reading data from exemption certificate images to streamline finance teams’ workflows.

Why LLMs are more accurate than OCR

While tools and techniques like OCR exist for a similar purpose, they have a few disadvantages when compared to multimodal LLMs:

1. Contextual understanding

Traditional OCR techniques output a mangled block of text that can be hard for both humans and machines to parse. As an example, compare the results of the two methods on a blank Texas Sales and Use Tax Exemption Certificate:

Original document

OCR result

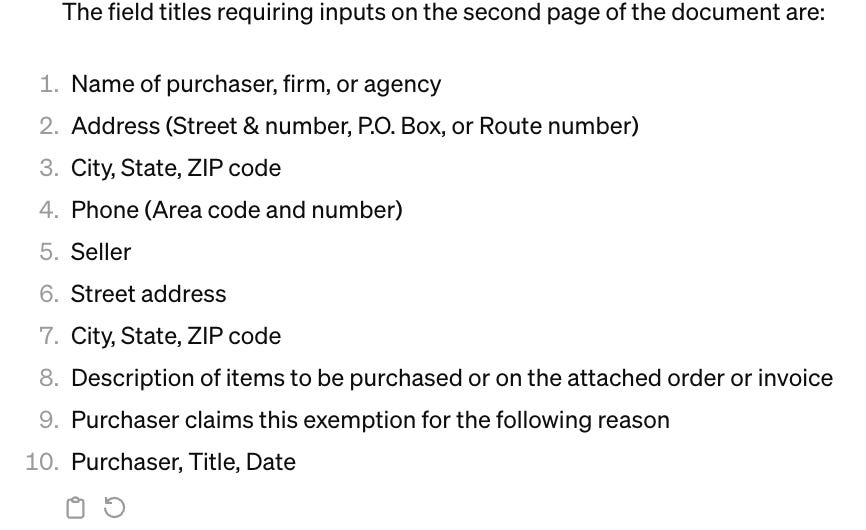

Vision model result

From the examples above, it is clear that for a task like this, OCR is showing its age. Beyond extracting raw text, Vision models let us focus on the relevant fields and interact with the data dynamically, for example by requesting only the fields we need.

2. Flexibility

Traditional OCR typically relies on a set of standard document formats, such as a form where dates, names, and signatures appear in predictable positions on a page. As a result, OCR only produces predictable output for documents similar to the ones it was designed for.

In our exemption certificate use case, each state has its own document format (often more than one), and non-standard content such as handwriting and images is common. Governments can also change these formats at any time, without notice. An LLM’s ability to work around those elements and adapt to changing document structures is a huge win for predictability and accuracy.
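One way to picture this flexibility: instead of encoding field positions for every state’s form, the extractor can ask for the same fixed set of keys no matter what the page looks like, then parse the model’s reply tolerantly. This is a minimal sketch under stated assumptions. The prompt wording, the field names, `build_prompt`, and `parse_reply` are hypothetical, and the step that actually sends the image and prompt to a particular Vision model API is deliberately left out.

```python
import json

# Hypothetical field list: the same keys are requested for every state's form,
# so downstream code never depends on where a field sits on the page.
FIELDS = ("issue_date", "expiration_date", "jurisdiction")


def build_prompt(fields: tuple = FIELDS) -> str:
    """Build one layout-independent instruction to send alongside the image."""
    keys = ", ".join(f'"{f}"' for f in fields)
    return (
        "You are reading a sales tax exemption certificate. "
        f"Return a single JSON object with exactly these keys: {keys}. "
        "Use ISO 8601 dates (YYYY-MM-DD) and null for any field that is "
        "blank, illegible, or not present on the form."
    )


def parse_reply(reply: str, fields: tuple = FIELDS) -> dict:
    """Extract the JSON object from a model reply and keep only expected keys.

    Models sometimes wrap JSON in prose or Markdown code fences, so this takes
    the span from the first "{" to the last "}" before decoding.
    """
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(reply[start : end + 1])  # JSONDecodeError is a ValueError
    # Missing keys become None rather than raising, so a partially filled
    # certificate still yields a record a person can review and complete.
    return {f: data.get(f) for f in fields}
```

Under these assumptions, supporting a new or redesigned state form would not require changing the prompt or the parser; only the model’s reading of the page changes. Unexpected extra keys in the reply are simply dropped.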

3. Speed of development

Our team was able to build a prototype for extracting data from exemption certificates in a matter of hours with this new technology. This remarkable pace enables the team to focus on providing the best user interface and iterate quickly based on customer feedback.

Just the beginning

Our team initially envisioned this project as a way to experiment with LLMs—the new multimodal models in particular—and found both the process and result to be more effective than expected. We’re launching this feature today as a beta to help our customers reduce manual processing time and focus on the strategic problems that matter most to their business. Given the speed and ease of building our first AI-powered feature, we expect there will be more to come soon.